Magento Solutions Partner, Comwrap, Collaborates with Magento on New Asynchronous Bulk API


The Use Case

For more than eight years, we have been developing Magento-based enterprise e-commerce solutions for a wide variety of customers. Many of these projects involved customers’ external ERP systems, which were not easy to integrate with Magento 1. Since the launch of Magento 2 and its amazing API coverage, our lives as Magento developers have become much easier.

Nevertheless, a challenging project last year revealed that the Magento API couldn’t adequately support some of our needs. Our customer’s ERP system was not able to send requests to Magento one-by-one. Instead, it sent requests simultaneously for all objects. This meant that Magento received 1000+ “create product” requests via an API endpoint… and after that, requests to update stocks, prices, tier prices, etc. To make a long story short: there were problems.

In addition to this issue, we also had to deal with:

- The customer's ERP system was not capable of saving and tracking error messages. As a result, we could not retrace how errors occurred and how they could be fixed.

- Sending a large number of requests to create or change products in Magento at the same time overloaded the database. The resulting drop in performance hurt both conversions and the shoppers’ user experience.

- For massive imports, the ERP system had to stay online until the process finished.

Our Idea

As a solution, we created a middleware that catches all requests to the Magento REST API and forwards them to a RabbitMQ queue. It then reads the messages back from RabbitMQ and executes them one by one. In addition, we implemented a log of all actions so that errors can easily be traced back. This internal solution solved all of these problems, and we successfully completed the customer project.
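The middleware idea can be illustrated with a minimal Python sketch. It is not comwrap's actual code: an in-process `queue.Queue` stands in for RabbitMQ, and `accept_request`, `consume`, and `action_log` are hypothetical names. Incoming REST calls are only enqueued, a consumer executes them one by one, and every outcome, including errors, is written to a log.

```python
import queue
import uuid
from typing import Callable

# Stand-in for the RabbitMQ queue (assumption: in-process queue for illustration).
message_queue: "queue.Queue[dict]" = queue.Queue()
# Log of all actions, so every request and its outcome can be traced later.
action_log: list[dict] = []


def accept_request(method: str, path: str, body: dict) -> str:
    """Middleware entry point: enqueue the REST call instead of executing it."""
    message_id = str(uuid.uuid4())
    message_queue.put({"id": message_id, "method": method, "path": path, "body": body})
    action_log.append({"id": message_id, "event": "queued", "path": path})
    return message_id


def consume(execute: Callable[[str, str, dict], dict]) -> None:
    """Consumer: drain the queue and execute the messages one by one."""
    while True:
        try:
            msg = message_queue.get_nowait()
        except queue.Empty:
            break
        try:
            result = execute(msg["method"], msg["path"], msg["body"])
            action_log.append({"id": msg["id"], "event": "done", "result": result})
        except Exception as exc:  # record the error instead of losing it
            action_log.append({"id": msg["id"], "event": "failed", "error": str(exc)})
        finally:
            message_queue.task_done()
```

Because the ERP system only waits for `accept_request`, it no longer has to stay online until the whole import has been processed.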

After that experience we got another idea: “Why can’t we do all this directly in Magento?”

So we contacted the Magento Community Engineering team with our proposal, and we all agreed that this would be an amazing feature. They then invited us to participate in the implementation.

Magento Contribution

And voilà! We learned that another company, Balance Internet, which had faced similar problems, had proposed a similar solution to Magento. They were also eager to contribute to the implementation.

That was the beginning of a close cooperation with the Magento team and Balance Internet.

As a first step, the Magento Community Engineering team organized a conference call for all developers interested in the implementation. The purpose of this call was to get to know the participants and to discuss the project architecture, the main tasks, and the responsibilities. Many subsequent calls followed to discuss the architecture and the minimum set of features, and all participants provided lots of input. Let me summarize the results of the architectural discussions.

Step 1: Architecture

New Endpoints

The main goal of an Asynchronous API is to add REST URL routes so that Magento can distinguish between synchronous and asynchronous calls. At the same time, the developer should do as little as possible to create new asynchronous APIs, so ideally all existing Magento Web APIs should automatically support asynchronous mode.

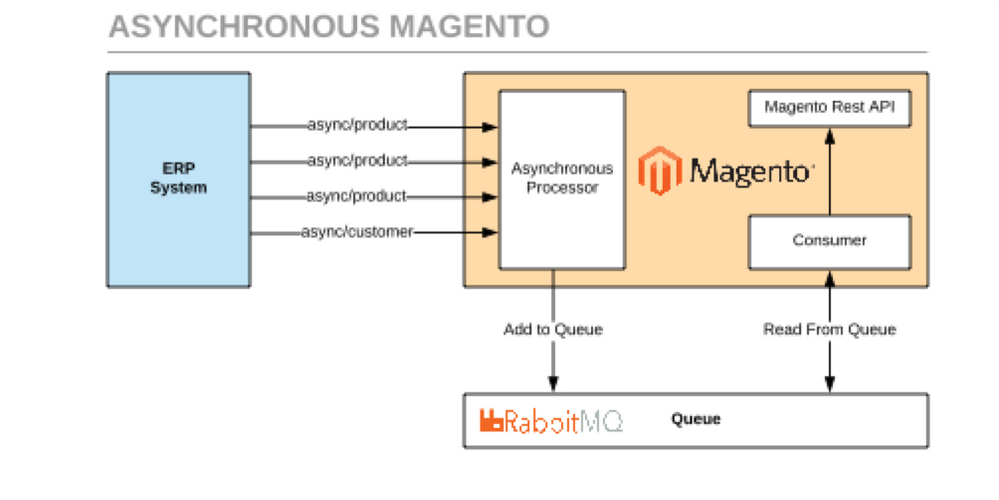

An asynchronous processor sends asynchronous calls to the message queue. Then a consumer reads them from the queue and executes them one-by-one. To distinguish between the two types of calls, we added an “async” prefix.

For example:

POST /async/V1/products
PUT /async/V1/products
DELETE /async/V1/products

Everything else about the API request stays the same: the parameter logic and the request body are unchanged and are transferred in the same way as in a synchronous request.
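Because only the prefix differs, the routing decision can be sketched as a simple path check. The following Python function is a hypothetical illustration, not Magento's implementation: it strips `/async` so that the existing synchronous route definition can be reused unchanged, and, as an assumption based on the POST/PUT/DELETE examples, it accepts only those methods in asynchronous mode. Bulk routes are not handled in this sketch.

```python
ASYNC_PREFIX = "/async"
# Assumption: asynchronous mode is offered for write methods only.
ASYNC_METHODS = {"POST", "PUT", "DELETE"}


def route_request(method: str, path: str) -> tuple[str, str]:
    """Classify a REST call and return (mode, route of the existing handler)."""
    if path.startswith(ASYNC_PREFIX + "/"):
        if method.upper() not in ASYNC_METHODS:
            raise ValueError(f"{method} is not supported in asynchronous mode")
        # The remaining route is identical to the synchronous one, so every
        # existing Web API definition can serve both modes.
        return "async", path[len(ASYNC_PREFIX):]
    return "sync", path
```

In this design the asynchronous processor only needs the returned route to find the service contract; execution itself is deferred to the queue consumer.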

The complete workflow looks like this:

Asynchronous Responses

By default, for every asynchronous request, Magento creates a bulk operation: an entity that aggregates multiple operations and tracks their aggregated status. For a single asynchronous request, the bulk consists of only one operation. In response to an asynchronous request, Magento returns a generated bulk UUID, which can later be used to track the status of the bulk operations.

Example:

{
  "bulk_uuid": "b8b79af4-fe6a-4f8a-a6f3-76b6e95aeec8",
  "request_items": {
    "items": [
      {
        "id": 0,
        "data_hash": null,
        "status": "accepted"
      }
    ]
  }
}
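From the client's side, an asynchronous call differs from a synchronous one only in the URL and in the response it receives. The sketch below uses Python's standard `urllib.request` to prepare the call and to extract the bulk UUID from the response. The base URL (assumed to include Magento's `/rest` prefix), the bearer token, and the helper names are illustrative assumptions.

```python
import json
import urllib.request


def build_async_request(base_url: str, token: str, product: dict) -> urllib.request.Request:
    """Prepare POST /async/V1/products; the body is the same as for the sync call."""
    return urllib.request.Request(
        url=f"{base_url}/async/V1/products",
        data=json.dumps({"product": product}).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


def extract_bulk_uuid(response_body: str) -> str:
    """Pull the bulk UUID out of an asynchronous response for later status checks."""
    return json.loads(response_body)["bulk_uuid"]
```

Against a real store, the request would be sent with `urllib.request.urlopen(req)` and the decoded body passed to `extract_bulk_uuid`.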

Bulk API

Another major use case for asynchronous APIs is a third-party system sending a large number of entities to the same endpoint. Instead of invoking the endpoint multiple times, we provide a special type of API endpoint called Bulk. It combines multiple entities of the same type into an array and sends them in a single API request. The endpoint handler splits the array into individual entities and writes them as separate messages to the message queue.
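The splitting step can be sketched as follows. This is a simplified, hypothetical illustration, not the AsynchronousOperations code: it gives each array element an operation ID equal to its index, builds one queue message per entity under a shared bulk UUID, and produces a response in the same shape as the asynchronous response shown earlier. The topic name is a placeholder.

```python
import uuid


def split_bulk_request(entities: list[dict], topic: str) -> tuple[dict, list[dict]]:
    """Split a bulk body into one queue message per entity.

    Returns the response for the client and the messages to publish.
    """
    bulk_uuid = str(uuid.uuid4())
    messages: list[dict] = []
    items: list[dict] = []
    for index, entity in enumerate(entities):
        # Each entity becomes its own operation, identified by its array index.
        messages.append({"bulk_uuid": bulk_uuid, "id": index, "topic": topic, "data": entity})
        items.append({"id": index, "data_hash": None, "status": "accepted"})
    response = {"bulk_uuid": bulk_uuid, "request_items": {"items": items}}
    return response, messages
```

Using the array index as the operation ID lets the client map each status back to the entity it sent.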

Because we were already using the AsynchronousOperations module of the Magento core, implementing the Bulk API was rather straightforward. AsynchronousOperations works with the queuing system out of the box, and it has methods that accept bulk operations. The only thing that needed to be implemented was the correct handling of Web API requests. As a result, we have new routes for bulk operations that look like this (example for the /products route):

POST /async/bulk/V1/products

with body:

[
  {"product": {...}},
  {"product": {...}}
  …
]

The response contains a list of operation statuses:

{
  "bulk_uuid": "GENERATED UUID",
  "request_items": {
    "items": [
      {
        "id": 0,
        "data_hash": null,
        "status": "accepted"
      },
      {
        "id": 1,
        "data_hash": null,
        "status": "accepted"
      }
    ]
  }
}

So now, any POST, PUT, or DELETE request can be executed as a bulk operation.
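For very large imports, such as the 1000+ products in our use case, a client might split its data into several bulk requests. In this Python sketch, the batch size of 100, the base URL, and the helper names are assumptions for illustration; the route and body shape follow the bulk example above, and any item whose status is not `accepted` is reported back by its array index.

```python
import json
import urllib.request
from typing import Iterator


def bulk_bodies(products: list[dict], batch_size: int = 100) -> Iterator[list[dict]]:
    """Yield bulk request bodies, each wrapping at most batch_size products."""
    for start in range(0, len(products), batch_size):
        yield [{"product": p} for p in products[start:start + batch_size]]


def build_bulk_request(base_url: str, token: str, body: list[dict]) -> urllib.request.Request:
    """Prepare POST /async/bulk/V1/products for one batch."""
    return urllib.request.Request(
        url=f"{base_url}/async/bulk/V1/products",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


def not_accepted(response_body: str) -> list[int]:
    """Return the array indexes of entities the endpoint did not accept."""
    items = json.loads(response_body)["request_items"]["items"]
    return [item["id"] for item in items if item["status"] != "accepted"]
```

Each batch returns its own bulk UUID, so a client would keep one UUID per batch for the status checks.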

Parameters in URL

Another question was: what is the best way to handle placeholders for parameters inside endpoint URLs, such as this:

PUT /V1/product/:sku/options/:optionId

After discussing several ideas and voting, we settled on this solution:

- Requests like PUT /V1/product/:sku/options/:optionId are converted so that parameters like “sku” and “optionId” are not required as input.

- For Bulk API requests, the URL path is generated by replacing the colon “:” with the prefix “by”.

Example:

PUT /async/bulk/V1/product/bySku/options/byOptionId
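The naming rule for bulk routes can be expressed as a small string transformation. This Python sketch is a hypothetical reimplementation of the rule, not Magento's code; it assumes the first letter of the parameter name is capitalized after `by`, as in the `bySku`/`byOptionId` example.

```python
import re

# Matches route placeholders such as ":sku" or ":optionId".
_PLACEHOLDER = re.compile(r":(\w+)")


def bulk_route(sync_route: str) -> str:
    """Turn a synchronous route into its bulk counterpart."""
    def by_prefix(match: re.Match) -> str:
        name = match.group(1)
        # Replace the colon with "by" and capitalize the parameter name.
        return "by" + name[0].upper() + name[1:]

    return "/async/bulk" + _PLACEHOLDER.sub(by_prefix, sync_route)
```

For example, `bulk_route("/V1/product/:sku/options/:optionId")` yields `/async/bulk/V1/product/bySku/options/byOptionId`, so the bulk route stays readable while containing no per-entity values.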

Status Endpoints

We’ve added several endpoints that let you check the operation status and retrieve the actual operation responses, so you can track progress, detect errors, and so on. These endpoints use the bulk UUID as the search parameter.

Get the Short Status

Returns the status of the operations in the bulk:

GET /V1/bulk/:bulkUuid/status

Response:

{
  "operations_list": [
    {
      "id": 0,
      "status": 0,
      "result_message": "string",
      "error_code": 0
    }
  ],
  "extension_attributes": {},
  "bulk_id": "string",
  "description": "string",
  "start_time": "string",
  "user_id": 0,
  "operation_count": 0
}
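A client can poll this endpoint until all operations are done. The Python sketch below condenses the response into counts per status. The mapping of numeric codes to names is an assumption based on the status constants in Magento's bulk `OperationInterface` (1 complete, 2 retriably failed, 3 not retriably failed, 4 open, 5 rejected) and should be checked against your Magento version; `summarize_status` is a hypothetical helper.

```python
import json
from collections import Counter

# Assumption: codes follow Magento's bulk OperationInterface constants.
STATUS_NAMES = {
    1: "complete",
    2: "retriably_failed",
    3: "not_retriably_failed",
    4: "open",
    5: "rejected",
}


def summarize_status(response_body: str) -> dict:
    """Condense a GET /V1/bulk/:bulkUuid/status response into counts per status."""
    payload = json.loads(response_body)
    counts = Counter(
        STATUS_NAMES.get(op["status"], f"unknown({op['status']})")
        for op in payload["operations_list"]
    )
    return {
        "bulk_id": payload["bulk_id"],
        "counts": dict(counts),
        # Finished once every operation is reported and none is still open.
        "finished": counts["open"] == 0
        and sum(counts.values()) == payload["operation_count"],
    }
```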

Get the Detailed Status

Returns the status of each operation together with its detailed response (field “result_serialized_data”):

GET /V1/bulk/:bulkUuid/detailed-status

Response:

{
  "operations_list": [
    {
      "id": 0,
      "topic_name": "string",
      "status": 0,
      "result_serialized_data": "string",
      "result_message": "string",
      "error_code": 0
    }
  ],
  "extension_attributes": {},
  "bulk_id": "string",
  "description": "string",
  "start_time": "string",
  "user_id": 0,
  "operation_count": 0
}
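The detailed status is what makes errors traceable, which was one of the original problems in our use case. This Python sketch picks out failed operations and decodes `result_serialized_data`. It assumes that the status codes follow Magento's bulk `OperationInterface` constants (2, 3, and 5 treated as failures) and that the serialized data is JSON when present, falling back to the raw string otherwise; both are assumptions to verify against your installation, and the helper names are hypothetical.

```python
import json
from typing import Any

# Assumption: 2 = retriably failed, 3 = not retriably failed, 5 = rejected.
FAILED_STATUSES = {2, 3, 5}


def failed_operations(response_body: str) -> list[dict]:
    """Collect failed operations from a detailed-status response."""
    payload = json.loads(response_body)
    return [
        {
            "id": op["id"],
            "topic": op["topic_name"],
            "error_code": op["error_code"],
            "message": op["result_message"],
        }
        for op in payload["operations_list"]
        if op["status"] in FAILED_STATUSES
    ]


def decode_result(op: dict) -> Any:
    """Decode result_serialized_data, assuming JSON; return the raw string otherwise."""
    raw = op.get("result_serialized_data")
    if not raw:
        return None
    try:
        return json.loads(raw)
    except json.JSONDecodeError:
        return raw
```

Storing this output on the client side gives the error history that the ERP system in our project could not keep itself.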

Swagger

A very important feature that we implemented was a Swagger schema for asynchronous operations. The generated Swagger UI serves as documentation for the asynchronous endpoints, outlining their input and output types.

Step 2: Planning and Implementation

Our contributor team on this project consisted of companies in different time zones:

- comwrap GmbH, located in Frankfurt, Germany

- Balance Internet, located in Melbourne, Australia

- Magento Community Engineering, located in Austin, USA

Therefore, synchronizing all contributing parties wasn’t an easy job!

Eventually we found a suitable time slot, which we used throughout the development phase. Meetings usually took place weekly, sometimes every two weeks. The first few meetings were dedicated to prioritizing features, grooming the backlog, and estimating the work. It was important to understand the amount of work to be done so that we could finalize the scope of the feature and plan its release. At our sync-up meetings, we discussed what had already been completed, what problems we had, new ideas, how we could improve the product or the implementation, and what needed to be done next. The last meeting was dedicated to a demo of the functionality, and you can find the recording here:

https://www.youtube.com/watch?v=i2iVMP0QzII

In the middle of development, we had the chance to participate in one of Magento’s Contribution Days in Ukraine, where we took the first steps toward migrating the Magento Message Queue and the RabbitMQ integration framework from Magento Commerce to Magento Open Source.

Step 3: Testing and Release

And finally, after 3 months of development, we finished the project with 4 pull requests covering all the functionality described above.

We set ourselves the goal of finishing everything by the end of March, before the Magento 2.3 feature freeze. This posed a real challenge, since we had many discussions about the architecture of the modules, the best ways to implement them, appropriate test coverage, and so on. But in the end, we made it in time: our last pull request was merged one day before the cut-off.

I really appreciated the help the Magento team provided, and I want to give a big thanks to the Community Engineering team for their great support. With their help and advice, we managed to complete the project, including all the required tests, and to release this new functionality. It will be available in both Magento Open Source and Magento Commerce 2.3.

Results

After 3 months of intense work, we can finally release an amazing new feature for Magento Open Source and Magento Commerce. It was a moment of happiness, since the feature we developed is valuable not only to us but also to everyone with a similar use case. We felt like part of the big Magento Community.

There is much more to do around the bulk and asynchronous APIs. The backlog includes a Redis framework for message statuses, strictly typed status messages, improvements to the message queue framework, and more. Please join us in the development of the next phase of this project!

For us, as developers at comwrap, it was a truly great experience to work together as one big team. We enjoyed participating in the Magento Contribution Days to improve our personal and team skills, as well as developing this amazing new feature that we can use for our clients in the future.

From the Magento Community Engineering Team

We are constantly looking for new projects and proposals for features that you are interested in building that address issues you’ve encountered in your projects.

We offer nice bounties for Solution Partners as part of our Contribution Program https://community.magento.com/t5/Magento-DevBlog/Partners-Contribution-Rewards-Q1-2018-Rankings-Anno... and, moreover, we try to make it a fulfilling experience of improving the product for all users.

There are multiple projects https://github.com/magento/community-features/projects/1 in the pipeline right now, at different stages of design or development. Write to us in the Community Engineering Slack channel or at engcom@magento.com if you want to join one of them or propose a new one.
