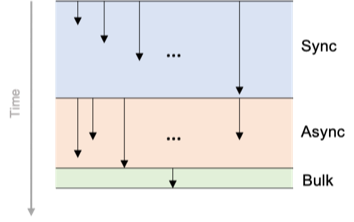

Thanks to a collaboration between comwrap GmbH, Balance Internet and the Magento Community Engineering team, Asynchronous / Bulk API functionality was first delivered in Magento 2.3.0 as a new API that makes it possible to execute operations asynchronously using RabbitMQ (which has also shipped with Magento out of the box since version 2.3.0). The details and history of the Asynchronous / Bulk API are described in a previous DevBlog post by Oleksandr Lyzun. In short: a user sends the same request as for the synchronous API, but the response contains only a bulk_uuid. The request is placed in a RabbitMQ queue, and Magento consumers process the queued messages in order. Using the bulk_uuid, users can monitor the processing of their request. One big benefit of bulk operations is that users can send many operations in a single request (e.g. to create or update a list of products).
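As a rough illustration of the three request styles, the following Python sketch builds (but does not send) the requests for creating products. The base URL, the store code `default` and the endpoint paths are assumptions based on Magento's convention of prefixing a synchronous route with `async/` or `async/bulk/`; `build_requests` is a hypothetical helper, not part of Magento or of the script used in this post.

```python
import json
from typing import Any, Dict, List, Tuple

# Assumed base URL and store code; adjust for your installation.
BASE_URL = "https://magento.example.com/rest/default"

# Assumed endpoint paths for each mode.
ENDPOINTS = {
    "sync": "/V1/products",
    "async": "/async/V1/products",
    "bulk": "/async/bulk/V1/products",
}


def build_requests(mode: str, products: List[Dict[str, Any]],
                   base_url: str = BASE_URL) -> List[Tuple[str, str]]:
    """Return (url, json_body) pairs for creating `products` in `mode`.

    Sync and Async send one POST per product; Bulk sends a single POST
    whose body is a JSON array with one entry per product.
    """
    url = base_url + ENDPOINTS[mode]
    if mode == "bulk":
        body = json.dumps([{"product": p} for p in products])
        return [(url, body)]
    return [(url, json.dumps({"product": p})) for p in products]
```

For Async, each of the N requests returns its own bulk_uuid; for Bulk, the single request returns one bulk_uuid covering all N operations.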

It Is All About Performance

One of the most important needs of our customers is speed. That is what motivated this investigation: we wanted to find out whether the new API is worth adopting and whether it meets performance targets.

We created a script to run load tests and compare processing times for Synchronous, Asynchronous and Bulk API endpoints.

Attempt #1

Our first attempt at a load/performance test used JMeter. With JMeter we can easily send many requests to different endpoints (Sync, Async and Bulk).

We soon realized that JMeter was not flexible enough: we needed a tool that could run custom logic, not just a performance test tool. JMeter produced a .csv file with the elapsed time, the response code and much more information for every request, most of which was useless to us 😊, because:

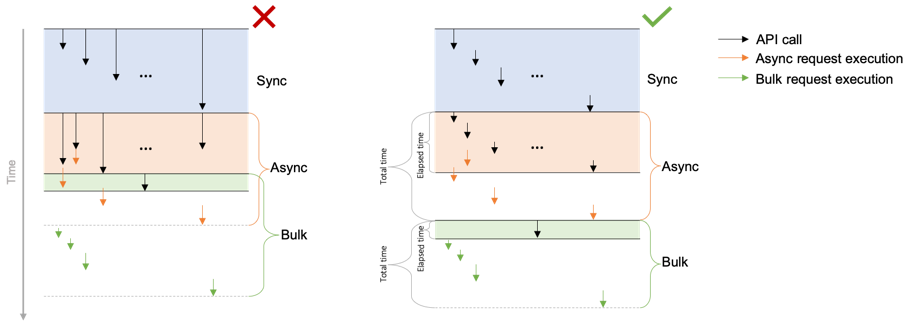

- We do not need the elapsed time per request. What interests us is how much time is needed to import a given number of items. Therefore, what we were looking for is a summary per run (involving many items to be processed) rather than per request.

- Elapsed time alone gives us no information about total time: we had no way to tell how long asynchronous requests were waiting in the queue.

In the end, we decided to start from scratch using Python. Although this first attempt did not give us specific metrics or results we considered thorough, it taught us several important points for designing a new test algorithm. So, back to work!

Attempt #2

The final version of the test consists of 2 steps:

- Send requests and save elapsed time (from here we can see how much time the system needs to accept messages and put them in the queue).

- Collect the created items from the database and calculate the total time elapsed from sending the first request until the last item is created. We also calculate the percentage of successfully created items.

These steps are repeated a few times for every method in order to identify trends and reduce noise in the results. We define a test “batch” as a run against one of the API endpoints with a fixed number of items.
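Step 1 of the procedure above might look like the following minimal Python sketch. It is not the authors' script: `BatchRun` and `send_batch` are hypothetical names, and the HTTP call is abstracted into an injected `send(url, body)` callable so the timing logic can be exercised without a Magento server. The batch size is passed explicitly because a single Bulk request carries many items.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class BatchRun:
    """Timing data for one batch (one endpoint, fixed number of items)."""
    mode: str
    size: int
    first_request_start: float = 0.0                    # Unix timestamp
    elapsed: List[float] = field(default_factory=list)  # seconds per request
    responses: list = field(default_factory=list)


def send_batch(mode: str, requests: List[Tuple[str, str]],
               send: Callable[[str, str], object], size: int) -> BatchRun:
    """Send every request and record how long each one took to be accepted.

    `send` is a hypothetical callable (url, body) -> response, e.g. a thin
    wrapper around an HTTP client.
    """
    run = BatchRun(mode=mode, size=size)
    run.first_request_start = time.time()  # wall-clock start of the batch
    for url, body in requests:
        t0 = time.perf_counter()
        run.responses.append(send(url, body))
        run.elapsed.append(time.perf_counter() - t0)
    return run
```

Repeating a batch is then a matter of calling `send_batch` several times for each mode and size, letting the previous batch finish completely in between.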

When dealing with asynchronous processes, it is important to remember that a new batch must wait until the previous one is fully processed. That is, we must wait not only until all API requests are accepted but also until all items are created. For synchronous imports this is not necessary: by the time the last response arrives, product creation has finished and the server is "free". For asynchronous calls, once the requests are accepted, all of our messages are queued in RabbitMQ but not yet executed. If a new test batch started immediately, performance could degrade, since the server would most likely still be busy creating items from the previous batch.

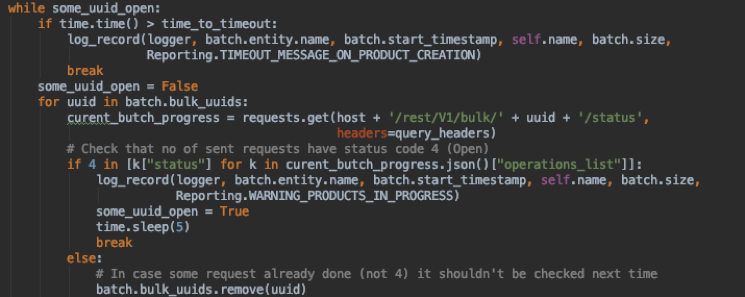

To check whether all requests have been processed, we use the bulk_uuid values stored from our initial requests (a list of UUIDs for Async, only one for Bulk) and call:

GET /V1/bulk/:bulkUuid/status

If some requests are still open, we wait for a while and check again. Only after all requests have been processed do we move on, collect the results and send the next batch of items.
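The waiting loop can be sketched as follows. Two assumptions should be checked against your Magento version: that the status response lists operations under an `operations_list` key, and that an operation status of `4` means "open" (not yet processed). `wait_until_processed` and the injected `get_status` callable (which would perform the GET request for one UUID and return the decoded JSON) are hypothetical names.

```python
import time
from typing import Callable, Dict, Iterable

# Assumption: operation status code meaning "open" (not yet processed).
STATUS_OPEN = 4


def wait_until_processed(bulk_uuids: Iterable[str],
                         get_status: Callable[[str], Dict],
                         poll_interval: float = 5.0,
                         timeout: float = 3600.0,
                         sleep: Callable[[float], None] = time.sleep) -> bool:
    """Poll GET /V1/bulk/:bulkUuid/status until no operation is open.

    Returns True once every bulk has no open operations, or False if about
    `timeout` seconds of waiting pass first.
    """
    pending = set(bulk_uuids)
    waited = 0.0
    while True:
        # Keep only the bulks that still contain at least one open operation.
        pending = {
            uuid for uuid in pending
            if any(op.get("status") == STATUS_OPEN
                   for op in get_status(uuid).get("operations_list", []))
        }
        if not pending:
            return True
        if waited >= timeout:
            return False
        sleep(poll_interval)
        waited += poll_interval
```

Injecting `sleep` keeps the loop testable; in a real run the default `time.sleep` is used.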

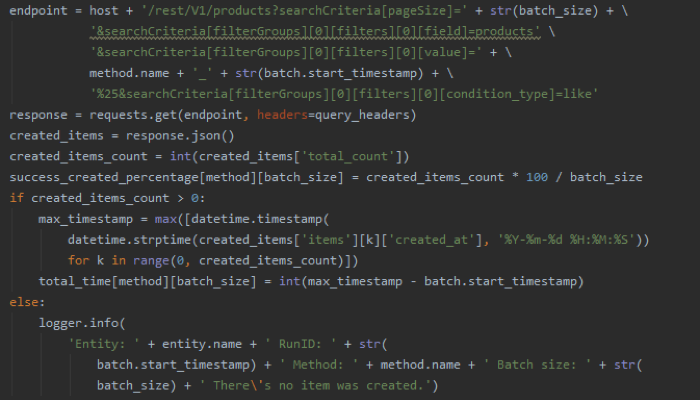

In the second step, the script requests the created_at time of the products, filtering by SKU (the batch_id is included in the product SKUs so that items from one run can be found easily). The total time is then the difference between max(created_at) and the saved time of the first request sent. The fastest way to get created_at would be a GraphQL request:

GET /graphql

```graphql
{
  products(
    filter: { sku: { like: "%_{{$batch_id}}_%" } }
    pageSize: 1000
    currentPage: 1
    sort: { name: DESC }
  ) {
    items {
      created_at
    }
  }
}
```

However, the GraphQL request would require a reindex before each query, so instead we use the general search API.
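A query equivalent to the GraphQL filter shown earlier can be expressed with Magento's REST searchCriteria syntax. The sketch below assumes the product list endpoint `GET /V1/products` (the post does not say exactly which search endpoint was used), and `sku_search_query` is a hypothetical helper.

```python
from urllib.parse import urlencode


def sku_search_query(batch_id: str, page_size: int = 1000,
                     current_page: int = 1) -> str:
    """Build a searchCriteria query string matching SKUs containing batch_id.

    Intended to be appended to GET /V1/products?... (assumed endpoint).
    """
    prefix = "searchCriteria[filter_groups][0][filters][0]"
    params = [
        (f"{prefix}[field]", "sku"),
        (f"{prefix}[value]", f"%_{batch_id}_%"),  # same pattern as the GraphQL filter
        (f"{prefix}[condition_type]", "like"),
        ("searchCriteria[pageSize]", str(page_size)),
        ("searchCriteria[currentPage]", str(current_page)),
    ]
    return urlencode(params)  # percent-encodes brackets and '%'
```

Each returned product includes created_at, from which max(created_at) is taken.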

Results

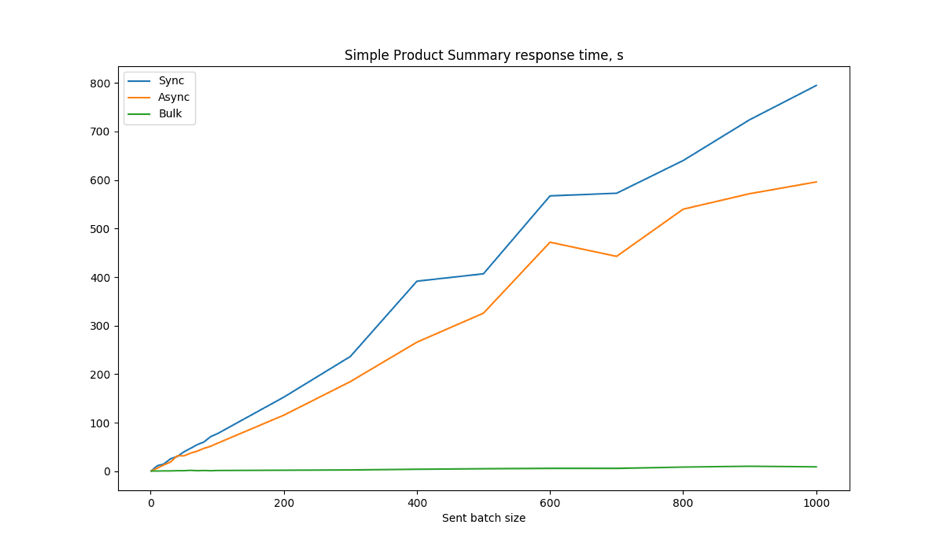

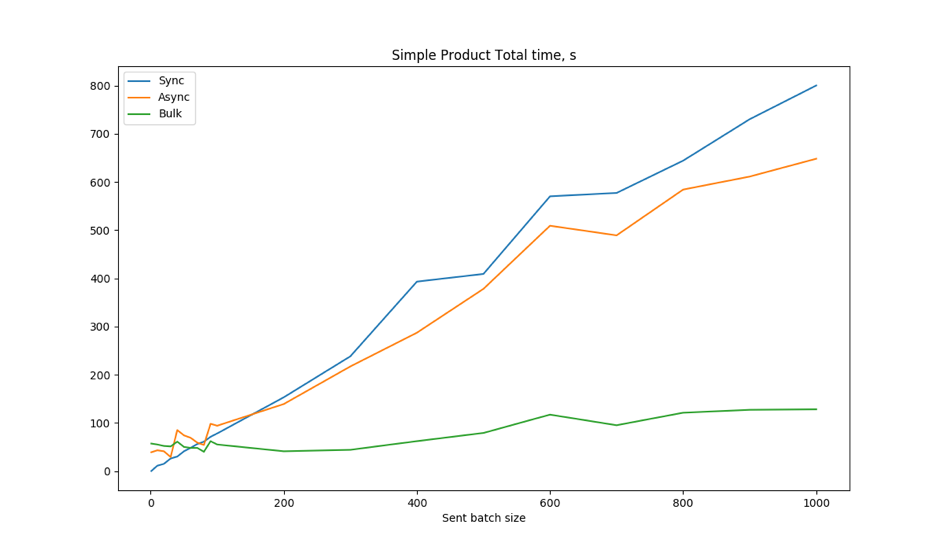

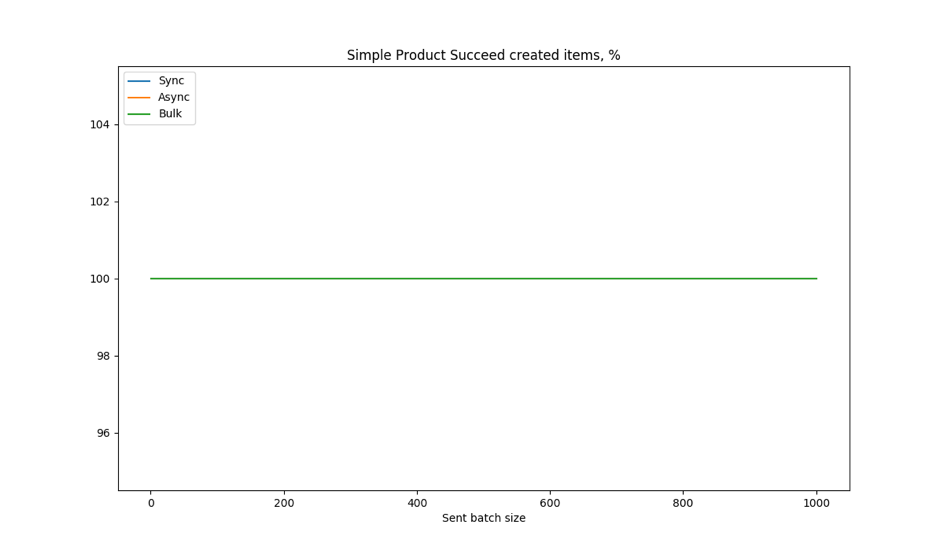

Using the script described above, which sends different numbers of products via Sync, Async and Bulk operations, we collected the following metrics:

- Elapsed time, summed over the entire batch

- Total time, calculated as the difference between the creation time of the last item and the start time of the first request

- Percentage of successfully created products
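The three metrics could be computed per batch roughly as follows. This is a sketch, not the authors' code: `batch_metrics` is a hypothetical helper, and it assumes that created_at values arrive as `YYYY-MM-DD HH:MM:SS` strings in UTC and that the first request's start time was saved as a Unix timestamp. Because created_at has one-second resolution, total time is only accurate to about a second.

```python
from datetime import datetime, timezone
from typing import List, Optional

# Assumed format of created_at values (interpreted as UTC).
MAGENTO_TS = "%Y-%m-%d %H:%M:%S"


def batch_metrics(elapsed: List[float], first_request_start: float,
                  created_at: List[str], expected_items: int) -> dict:
    """Summarize one batch: summed elapsed time, total time, success rate."""
    created = [
        datetime.strptime(ts, MAGENTO_TS).replace(tzinfo=timezone.utc).timestamp()
        for ts in created_at
    ]
    # Total time: last item creation minus start of the first request.
    total: Optional[float] = (max(created) - first_request_start) if created else None
    return {
        "elapsed_sum": sum(elapsed),
        "total_time": total,
        "success_pct": 100.0 * len(created) / expected_items,
    }
```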

The server this test was executed against had the following specifications:

- CPUs: 8
- RAM: 128 GB
- Magento: 2.3.2

Results follow:

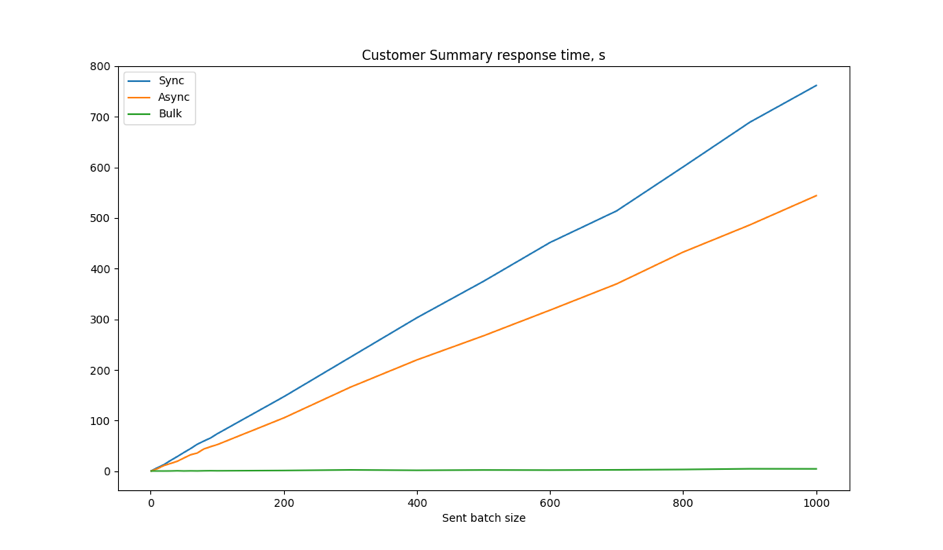

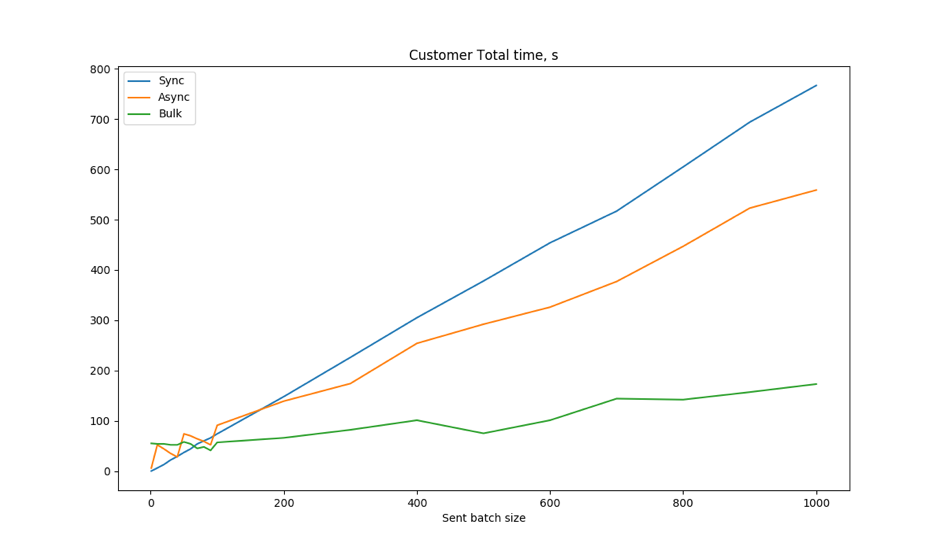

Since our customers import not only products, we also wanted to check performance for other entities. A test on customer creation showed very similar results.

So the results do not depend on the type of data being imported, because the new implementation only adds a new API layer and does not interact with any specific entity.

Conclusions:

- As expected, for the Synchronous method elapsed time equals total time, as the user gets a response only once the item has been created.

- The elapsed time of the Asynchronous API is a bit less than Sync, as requests return without waiting for the item to be created, but each API request still requires the system to initialize the entire Magento instance.

- Thanks to the time saved in sending requests, the total time of Async is a bit less than Sync on big batches, but greater on small batches. Total time improved by 19% on 1000-item batches but degraded by 20.5% on 100-item batches. Presumably the improvement on big batches comes from the fact that the last requests are still being sent while processing of the first requests has already begun.

- The elapsed time of the Bulk API is nearly constant, as it is almost independent of the number of items. The only per-item cost at queueing time is writing an operation status to the database and publishing a message to RabbitMQ.

- On big batches, Bulk can be several times faster in total time. On our test server, sending 100 items, we found a 30% decrease in total time using Bulk compared to Sync and a 41.5% decrease compared to Async. With 1000 simple products, this benefit increases dramatically, to 84% (vs. Sync) and 80% (vs. Async).

- On small batches (e.g. 1 product), the asynchronous methods (both Async and Bulk) are less efficient than Sync, as they involve more resources and communication overhead. Sometimes even a small batch sent via an asynchronous method waits in the RabbitMQ queue for some time before processing begins. Therefore, on small batch sizes, total time does not correlate strongly with batch size. But as the charts above show, the tendency is clear: the bigger the number of items, the more likely you are to get a reduced overall processing time, even allowing for some delay in the queue.
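To relate these percentages to "several times faster": assuming each percentage is the relative decrease in total time with the named method as the baseline, a decrease d corresponds to a speedup factor of 1/(1 − d).

```latex
d = \frac{T_{\text{baseline}} - T_{\text{Bulk}}}{T_{\text{baseline}}}
\quad\Longrightarrow\quad
\frac{T_{\text{baseline}}}{T_{\text{Bulk}}} = \frac{1}{1-d}

\text{1000 items: } d = 0.84 \ (\text{vs. Sync}) \Rightarrow \tfrac{1}{0.16} = 6.25,
\qquad d = 0.80 \ (\text{vs. Async}) \Rightarrow \tfrac{1}{0.20} = 5

\text{100 items: } d = 0.30 \ (\text{vs. Sync}) \Rightarrow \tfrac{1}{0.70} \approx 1.43
```

So on 1000-item batches, Bulk finished roughly five to six times faster than the other two methods on this server.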

So we can unequivocally conclude: implementing the Bulk API in Magento was a great idea that was fully worth the time spent. Using the Bulk API, you can save a lot of processing time on big data transfers!

P.S. The link to the Python script for checking performance on your server can be found here.
